A Scalable Solution for Hosting Multiple Micro-Frontend Apps

In large-scale front-end projects, managing multiple micro-frontend applications efficiently is a common challenge. By leveraging NX Module Federation along with Git submodules, AWS S3, CloudFront, and Docker, we can create a scalable and maintainable architecture that allows independent teams to own, build, and deploy their micro-frontends while maintaining consistency across the system.

In this article, we’ll walk through:

- The structure of a monorepo using NX and Module Federation.

- How to host multiple applications in a single S3 bucket with CloudFront Functions for dynamic request routing.

- Git submodules for independently managing remote applications.

- A Docker-based deployment strategy for optimized builds and deployments.

Let’s dive into the key concepts and implementation details.

Why Use Module Federation?

Module Federation is a feature introduced in Webpack 5 that allows dynamic runtime loading of micro-frontends, providing teams with the ability to build and share independent codebases across multiple applications. In the context of NX, it enables projects to be divided into a “shell” or host application and multiple “remote” applications. This architecture brings numerous benefits, particularly when scaling large, distributed systems:

1. Independent Deployments: Each remote can be developed, deployed, and updated independently without affecting the host or other remotes. This allows teams to work autonomously while maintaining a shared ecosystem.

2. Improved Load Performance: Because remote applications are loaded dynamically at runtime, there is no need to bundle everything into a single large file. This reduces the initial load time of the shell application, since each remote is fetched only when it is needed.

3. Seamless Code Sharing: Shared libraries between the host and remotes are essential for avoiding code duplication. Module Federation ensures that shared modules (like React, utility functions, or design systems) are only loaded once, even if multiple applications use them, improving both performance and consistency across micro-frontends.

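4. Flexibility in Versioning: Each remote can declare its own version of a shared dependency, and Module Federation resolves which copy to load at runtime. When the declared version ranges are compatible, a single copy is shared; when they are not, a remote can fall back to its own copy, so teams can adopt new versions of shared libraries at their own pace. Libraries that must exist only once per page (React, for example) still need compatible versions across the host and remotes.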
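To make the sharing and versioning points concrete, here is a minimal sketch of how the `shared` section of webpack's `ModuleFederationPlugin` options could be derived from a dependency map. The helper name `buildSharedConfig` and the package versions are assumptions made for illustration; `singleton` and `requiredVersion` are the webpack options that request a single runtime copy within a given version range.

```javascript
// Hypothetical helper (not part of webpack or NX): builds the `shared`
// option for ModuleFederationPlugin from a package.json-style dependency map.
function buildSharedConfig(dependencies, singletonPackages) {
  const shared = {};
  for (const [name, version] of Object.entries(dependencies)) {
    shared[name] = {
      // Singletons are loaded once per page, even if several remotes use them.
      singleton: singletonPackages.includes(name),
      // The semver range this application accepts for the shared copy.
      requiredVersion: version,
    };
  }
  return shared;
}

// Example values, assumed for illustration only.
const shared = buildSharedConfig(
  { react: '^18.2.0', 'react-dom': '^18.2.0', lodash: '^4.17.21' },
  ['react', 'react-dom']
);

console.log(JSON.stringify(shared, null, 2));
```

In this sketch React is marked as a singleton because it cannot run as two copies on the same page, while a utility library such as lodash is allowed to fall back to a per-remote copy when versions diverge.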

Benefits of Module Federation in a Micro-Frontend Architecture

In the context of large-scale micro-frontend architectures, the benefits of Module Federation are particularly compelling:

1. Reduced Build Times: By leveraging dynamic imports, Module Federation allows applications to be built in isolation. NX’s caching mechanism, combined with Module Federation, ensures that you only need to build the parts of the application that have changed. This can significantly reduce build and CI/CD pipeline times.

2. Enhanced Scalability: With each micro-frontend as an independent remote, different teams can scale their applications at their own pace. This architecture is highly conducive to distributed teams, as it allows individual teams to push features to production without having to coordinate with other teams working on the same application.

3. Decoupled Deployments: One of the biggest operational benefits of Module Federation is that remotes can be deployed independently of each other. If the deployment of one remote fails, the host and the other remotes keep serving their current versions, which improves the overall resiliency of the platform.

4. Simplified Rollbacks: Since each micro-frontend can be independently versioned and deployed, rolling back a problematic change can be done quickly for just the affected remote application without requiring a rollback of the entire monorepo. This reduces downtime and limits the blast radius of errors.
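The resiliency described above depends on the host tolerating a remote that fails to load. The following is a minimal sketch of that idea, not the article's actual implementation: `loadRemoteSafely` is a hypothetical wrapper, and the loaders are stand-ins for a real dynamic import such as `() => import('remote-app-1/Module')`.

```javascript
// Hypothetical wrapper: `loadRemote` is any function that returns a Promise
// for the remote module. If it rejects, the caller gets `fallback` instead.
async function loadRemoteSafely(name, loadRemote, fallback) {
  try {
    return await loadRemote();
  } catch (error) {
    // A failed remote should degrade one area of the page, not the whole shell.
    console.warn(`Remote "${name}" failed to load: ${error.message}`);
    return fallback;
  }
}

// Stand-in loaders, assumed for illustration only.
const healthyRemote = () => Promise.resolve({ title: 'User management' });
const brokenRemote = () => Promise.reject(new Error('remoteEntry.js returned 404'));
const placeholder = { title: 'Temporarily unavailable' };

async function main() {
  const first = await loadRemoteSafely('remote-app-1', healthyRemote, placeholder);
  const second = await loadRemoteSafely('remote-app-2', brokenRemote, placeholder);
  console.log(first.title, '|', second.title);
  return [first, second];
}

const result = main();
```

With a wrapper like this, a broken remote shows a placeholder while the rest of the shell keeps working.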

1. Monorepo Architecture Using NX

NX is a powerful build system for monorepos, providing an integrated way to manage multiple applications and shared libraries. In our case, we use Module Federation to divide our project into a shell application and multiple remote applications. This approach allows different teams to work on different parts of the application while still ensuring a cohesive final product.

Project Structure

Our project is set up as a monorepo with NX, which means:

- There is a single package.json file for managing dependencies across all applications and libraries.

- The shell application serves as the host for loading remote applications dynamically using Module Federation.

- Remote applications are loaded on-demand, enabling independent deployment and ownership.

- Shared libraries such as UI components, auth services, and utils are placed in a common directory and are shared across both the shell and remote applications to avoid code duplication and maintain consistency.

Here’s an overview of the typical structure of such a monorepo:

/apps
  /shell-app       # Shell application (host)
  /remote-app-1    # First remote application (e.g., user management)
  /remote-app-2    # Second remote application (e.g., marketplace)
/libs
  /ui              # Shared UI components
  /auth            # Shared authentication logic
  /utils           # Shared utility functions

By using shared libraries, we ensure that the shell and remote applications have access to the same components, reducing redundancy and ensuring uniformity in the user experience across the platform.
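For the shell to load remotes at runtime, it needs to know where each remote's `remoteEntry.js` lives. As a rough sketch, the `remotes` option of webpack's `ModuleFederationPlugin` could be assembled from environment-style values like this. The helper `buildRemotes`, the container names `remoteApp1` and `remoteApp2`, and the `env` object (a stand-in for `process.env`) are assumptions for illustration; the `name@url` string is the format webpack accepts for a remote.

```javascript
// Hypothetical helper: maps remote container names to "name@url" entries.
function buildRemotes(remoteUrls) {
  const remotes = {};
  for (const [name, url] of Object.entries(remoteUrls)) {
    if (!url) {
      // Fail early at build time rather than at runtime in the browser.
      throw new Error(`Missing remoteEntry URL for "${name}"`);
    }
    remotes[name] = `${name}@${url}`;
  }
  return remotes;
}

// Stand-in for process.env, using the example URLs from this article.
const env = {
  REMOTE_APP_1_URL: 'https://example.com/app1/remoteEntry.js',
  REMOTE_APP_2_URL: 'https://example.com/app2/remoteEntry.js',
};

const remotes = buildRemotes({
  remoteApp1: env.REMOTE_APP_1_URL,
  remoteApp2: env.REMOTE_APP_2_URL,
});

console.log(remotes);
```

Reading the URLs from the environment keeps the shell's build configuration identical across environments, with only the variable values changing.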

2. Using Git Submodules for Remote Applications

As remote applications grow, different teams may need to own and manage them independently. Git submodules offer one way to do this: each remote application lives in its own repository while still being checked out as part of the main monorepo.

Why Use Git Submodules?

- Independence: Each remote application can have its own version control history and lifecycle, enabling teams to work autonomously.

- Decoupling: Submodules ensure that changes to one remote don’t unnecessarily impact others.

- Easy Updates: The main monorepo pins each submodule to a specific commit, so a remote can be updated or rolled back by moving that pointer, and every checkout pulls in the pinned version of each remote.

Adding Submodules to the Monorepo

To add a remote application as a Git submodule, run the following command:

git submodule add https://github.com/your-org/remote-app-1.git apps/remote-app-1

This integrates the remote application’s repository into the monorepo without duplicating code.

Here’s a sample .gitmodules file that defines the paths for the remote submodules:

[submodule "apps/remote-app-1"]
  path = apps/remote-app-1
  url = https://github.com/your-org/remote-app-1.git
[submodule "apps/remote-app-2"]
  path = apps/remote-app-2
  url = https://github.com/your-org/remote-app-2.git

Each remote application is now treated as an individual project, but it can still be built and deployed as part of the monorepo.
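Because the build expects every remote to be present under `apps/`, a CI step may want to read `.gitmodules` and confirm the listed paths. The sketch below shows one way to extract the entries; `parseGitmodules` is a hypothetical helper written for this article's sample file, not a general-purpose Git config parser.

```javascript
// Hypothetical helper: extracts { name, path, url } entries from .gitmodules text.
function parseGitmodules(text) {
  const submodules = [];
  let current = null;
  for (const rawLine of text.split('\n')) {
    const line = rawLine.trim();
    // Section header, e.g. [submodule "apps/remote-app-1"]
    const header = line.match(/^\[submodule "(.+)"\]$/);
    if (header) {
      current = { name: header[1] };
      submodules.push(current);
      continue;
    }
    // Key/value pair inside the current section, e.g. path = apps/remote-app-1
    const pair = line.match(/^(\w+)\s*=\s*(.+)$/);
    if (pair && current) {
      current[pair[1]] = pair[2];
    }
  }
  return submodules;
}

const sample = [
  '[submodule "apps/remote-app-1"]',
  '  path = apps/remote-app-1',
  '  url = https://github.com/your-org/remote-app-1.git',
  '[submodule "apps/remote-app-2"]',
  '  path = apps/remote-app-2',
  '  url = https://github.com/your-org/remote-app-2.git',
].join('\n');

const submodules = parseGitmodules(sample);
console.log(submodules.map((s) => s.path));
```

A pipeline could compare these paths against the checked-out directories and fail fast when a submodule has not been initialized.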

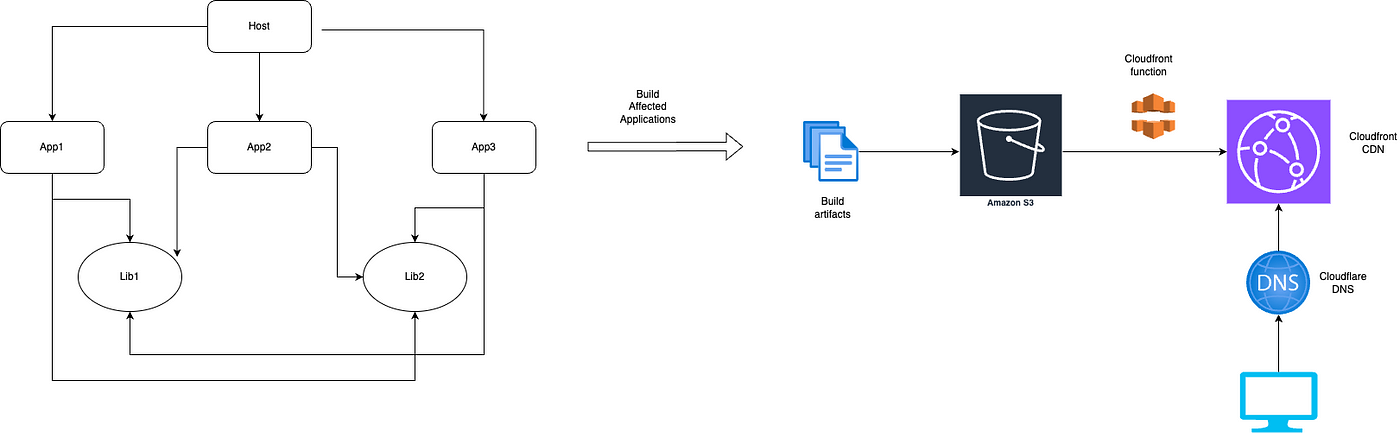

3. Optimized Deployment Strategy with NX and AWS

For large-scale monorepos, it’s important to optimize the build and deployment process. With NX, we can leverage the nx affected command to build only the applications and libraries affected by the latest changes. This dramatically reduces build times, especially when working with multiple applications.

NX Affected for Optimized Builds

By running the following command, NX will analyze the project dependencies and build only the affected applications:

npx nx affected --target=build

This ensures that we don’t waste time building unaffected parts of the monorepo.
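The list of affected projects can also drive which applications get deployed. As a sketch, assuming a newline-separated list of project names is available (recent NX versions can print one with `npx nx show projects --affected`; verify the exact command against your NX version), the helper below filters it down to deployable applications and joins them into a comma-separated value, the shape the `APPLICATION` build argument in this article's Dockerfile expects. The name `toApplicationArg` and the sample input are hypothetical.

```javascript
// Hypothetical helper: keeps only deployable apps from a newline-separated
// project list and joins them with commas.
function toApplicationArg(affectedOutput, deployableApps) {
  return affectedOutput
    .split('\n')
    .map((name) => name.trim())
    // Drops blank lines and libraries such as "ui" that are not deployed.
    .filter((name) => deployableApps.includes(name))
    .join(',');
}

// Sample input, assumed for illustration only.
const affectedOutput = 'shell-app\nui\nremote-app-2\n';
const deployableApps = ['shell-app', 'remote-app-1', 'remote-app-2'];

const applicationArg = toApplicationArg(affectedOutput, deployableApps);
console.log(applicationArg); // prints "shell-app,remote-app-2"
```

The resulting string could then be passed to the image build, for example as `--build-arg APPLICATION=shell-app,remote-app-2`.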

Hosting Multiple Applications in a Single S3 Bucket

To efficiently serve multiple micro-frontends, we can host all applications in a single S3 bucket. By using CloudFront as the CDN, we can route incoming requests dynamically to the correct application based on the host or URI.

CloudFront Functions for Dynamic Routing

CloudFront Functions allow us to modify the incoming requests to map them to the correct application within the S3 bucket. Below is a function that routes requests to different applications based on the host header:

function handler(event) {
  var request = event.request;
  var uri = request.uri;
  var host = request.headers.host.value;

  // Define mappings for host headers to corresponding S3 paths
  var hostMapping = {
    'example1.com': '/app1/index.html',
    'example2.com': '/app2/index.html'
  };

  // If the URI has no file extension and the host is mapped,
  // route the request to that application's index.html.
  // Unmapped hosts keep their original URI instead of getting an undefined one.
  if (!uri.includes('.') && hostMapping[host]) {
    request.uri = hostMapping[host];
  }

  return request;
}

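This function rewrites any request without a file extension to the index.html of the application mapped to the request’s host; each application is stored under its own directory in the same S3 bucket. Requests for files with an extension (scripts, styles, images) pass through unchanged.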
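Routing rules like these are easy to check locally before publishing the function. The sketch below restates the handler with a guard for hosts that have no mapping, and feeds it hand-built events. `makeEvent` is a hypothetical helper that builds only the parts of the viewer-request event the handler reads (`request.uri` and `request.headers.host.value`).

```javascript
// Restated routing logic, with a guard for hosts that have no mapping.
function handler(event) {
  var request = event.request;
  var host = request.headers.host.value;
  var hostMapping = {
    'example1.com': '/app1/index.html',
    'example2.com': '/app2/index.html'
  };
  if (!request.uri.includes('.') && hostMapping[host]) {
    request.uri = hostMapping[host];
  }
  return request;
}

// Hypothetical helper: minimal event shape for local checks.
function makeEvent(host, uri) {
  return { request: { uri: uri, headers: { host: { value: host } } } };
}

var cases = [
  ['example1.com', '/dashboard'],       // client-side route, rewritten
  ['example2.com', '/main.abc123.js'],  // static asset, passed through
  ['unknown.com', '/dashboard']         // unmapped host, left unchanged
];

var results = cases.map(function (c) {
  return handler(makeEvent(c[0], c[1])).uri;
});

console.log(results);
```

The three cases cover a client-side route, a static asset, and an unmapped host, which are the branches the routing logic distinguishes.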

4. Docker-Based Build and Deployment

We use Docker to containerize our build process, ensuring a consistent environment across all stages of deployment. Each remote application is built and deployed using a Dockerfile that handles everything from installing dependencies to syncing the output to S3.

Sample Dockerfile

Here’s a Dockerfile used for building and deploying the applications to S3:

FROM node:20-alpine3.20 AS builder

WORKDIR /app

# Install the AWS CLI, used to access AWS Secrets Manager and to sync files to S3
RUN apk add --no-cache python3 py3-pip aws-cli

# Environment variables for remote application URLs.
# AWS_SECRET_NAME is the name of the secret in AWS Secrets Manager.
# (Comments must sit on their own line: a trailing "#" inside ENV is parsed as a value.)
ENV NX_FROM_DOCKER=true \
    REMOTE_APP_1_URL=https://example.com/app1/remoteEntry.js \
    REMOTE_APP_2_URL=https://example.com/app2/remoteEntry.js \
    AWS_SECRET_NAME=my-app-secrets

# Install Node.js dependencies
COPY package*.json ./
RUN npm install -f

COPY . .

# Reset NX cache
RUN npx nx reset

# Build each application listed in APPLICATION (comma-separated) and set baseHref
# for correct asset paths. The baseHref shown here is an example value; adjust it per application.
ARG APPLICATION
RUN for app in $(echo "$APPLICATION" | tr "," "\n"); do \
      echo "Building application: $app"; \
      npx nx build "$app" --baseHref='/app1/host/' --outputHashing='all' || exit 1; \
    done

# Install jq, which the entrypoint script uses to parse the secret JSON
RUN apk add --no-cache jq

# Copy the entrypoint script, which syncs the built files to S3 when the container runs
COPY entrypoint-prod.sh /entrypoint.sh
RUN chmod +x /entrypoint.sh

CMD ["/entrypoint.sh"]

This Dockerfile:

- Sets environment variables for each remote application’s remoteEntry.js URL.

- Builds each application listed in the APPLICATION build argument using nx build and sets the baseHref so that resources are loaded correctly.

- Installs the AWS CLI, jq, and the entrypoint script, which syncs the built files to S3 when the container runs.

entrypoint.sh for Syncing to S3

#!/bin/sh
# Stop on the first failing command
set -e

# Fetch AWS credentials from AWS Secrets Manager
echo "Fetching AWS credentials from Secrets Manager..."
SECRETS=$(aws secretsmanager get-secret-value --secret-id "$AWS_SECRET_NAME" --query SecretString --output text)

# Extract values from the secret JSON (assuming the secret stores a JSON object
# with aws_access_key_id, aws_secret_access_key, and aws_default_region)
AWS_ACCESS_KEY_ID=$(echo "$SECRETS" | jq -r '.aws_access_key_id')
AWS_SECRET_ACCESS_KEY=$(echo "$SECRETS" | jq -r '.aws_secret_access_key')
AWS_DEFAULT_REGION=$(echo "$SECRETS" | jq -r '.aws_default_region')

# Export the credentials as environment variables
export AWS_ACCESS_KEY_ID
export AWS_SECRET_ACCESS_KEY
export AWS_DEFAULT_REGION

# Sync the built files to S3
aws s3 sync dist/apps "s3://$S3_BUCKET_NAME"

# Invalidate CloudFront cache
aws cloudfront create-invalidation --distribution-id "$CLOUDFRONT_DISTRIBUTION_ID" --paths "/*"

The script ensures that the newly built applications are uploaded to S3 and invalidates the CloudFront cache to serve the latest assets.

5. Automation and Caching with AWS CloudFront

To ensure users always receive the latest version of the application, we invalidate the CloudFront cache after each deployment. This is done via the AWS CLI in our entrypoint.sh script:

aws cloudfront create-invalidation --distribution-id $CLOUDFRONT_DISTRIBUTION_ID --paths "/*"

This command marks every cached object in the distribution as invalid, so CloudFront fetches the latest version of each asset from the S3 bucket on the next request instead of serving an outdated copy.
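Since the build in this article uses `--outputHashing='all'`, most asset filenames change with every build, so a narrower invalidation may be enough in some setups. The sketch below builds a list of unhashed entry files per application to pass to `--paths` instead of `/*`. The helper `buildInvalidationPaths`, the `app1`/`app2` prefixes, and the assumption that only `index.html` and `remoteEntry.js` keep stable names are illustrative; check which files are unhashed in your own build output.

```javascript
// Hypothetical helper: lists the unhashed entry files for each application prefix.
function buildInvalidationPaths(appPrefixes, entryFiles) {
  const paths = [];
  for (const prefix of appPrefixes) {
    for (const file of entryFiles) {
      // CloudFront invalidation paths start with a leading slash.
      paths.push(`/${prefix}/${file}`);
    }
  }
  return paths;
}

// Example values, assumed for illustration only.
const paths = buildInvalidationPaths(
  ['app1', 'app2'],
  ['index.html', 'remoteEntry.js']
);

console.log(paths.join(' '));
```

Invalidating `/*` remains the simplest option; a targeted list like this only reduces how much of the cache is discarded on each deployment.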

Flow chart of deployment process

Conclusion

By using NX Module Federation, Git submodules, and a combination of AWS S3 and CloudFront, we’ve built a scalable, flexible architecture for managing and deploying multiple micro-frontend applications. This setup:

- Allows independent teams to manage their applications while still being part of a unified system.

- Optimizes build times with nx affected.

- Hosts multiple applications in a single S3 bucket using CloudFront Functions for dynamic routing.

- Automates deployment and caching with Docker and AWS tools.

This architecture ensures efficient and scalable deployments, making it ideal for large, distributed teams.
